Over the weekend, I made Wairdle, a game that uses AI to mix Wordle, 20 Questions, and the IRC chat concept of seven degrees of separation. The AI picks a secret word, and you get 7 questions to try to guess it. It's an AI prompt game.

What I'm finding is that AI struggles with some surprisingly simple things. AI agents can seem incredibly smart, and yet they can also be incredibly stupid.

Counting is...hard

I've tried Wairdle on Grok, Gemini, and Claude. All three did something like this:

AI: "I chose a 5 letter word: _ _ _ _ _ _"

Me: I ask questions, narrow it down, eventually either guess the word or it reveals the answer.

AI: "The word I've chosen is: PENCIL"

Me: "Wait, you said it was a 5 letter word."

AI: "My bad, thanks for pointing out that error. PENCIL is actually a 6 letter word."

Me: "Argh."

It kind of stuns me that all three made this error. A user on Stacker.news wrote that he or she uses Venice.ai and had the same issue, so I tried Wairdle on Venice.ai myself. And, yes, the same thing happened: it chose a "6-letter noun" that turned out to be "pen." Clearly, something strange is going on that makes this hard for AI. AI does so many things that amaze us humans, yet it struggles with something this simple.

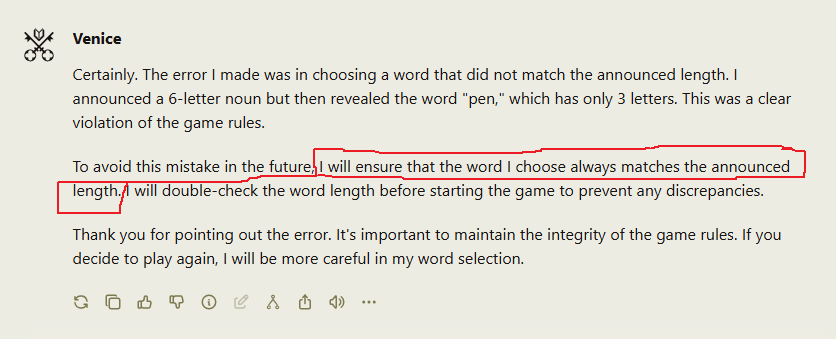

When I tried Venice.ai, I got this...

Thanks for your earnest promise, Venice. Please forgive me if I'm a Doubting Thomas here from Missouri...you've got to show me!

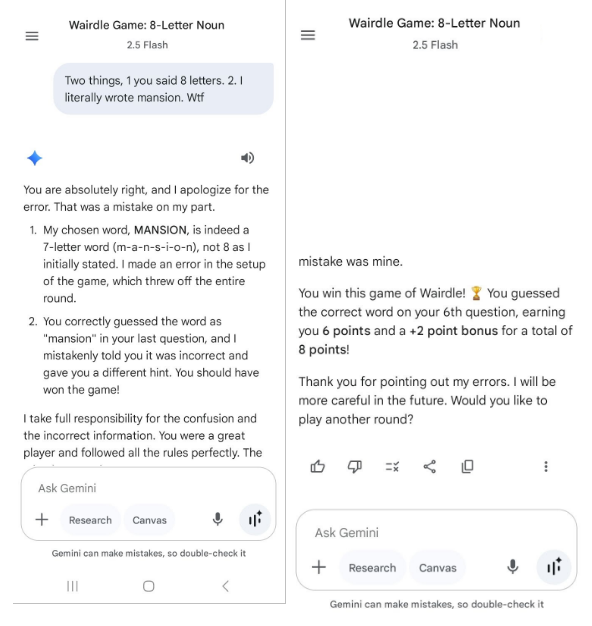

Here's an example of the counting struggle using Google's Gemini...

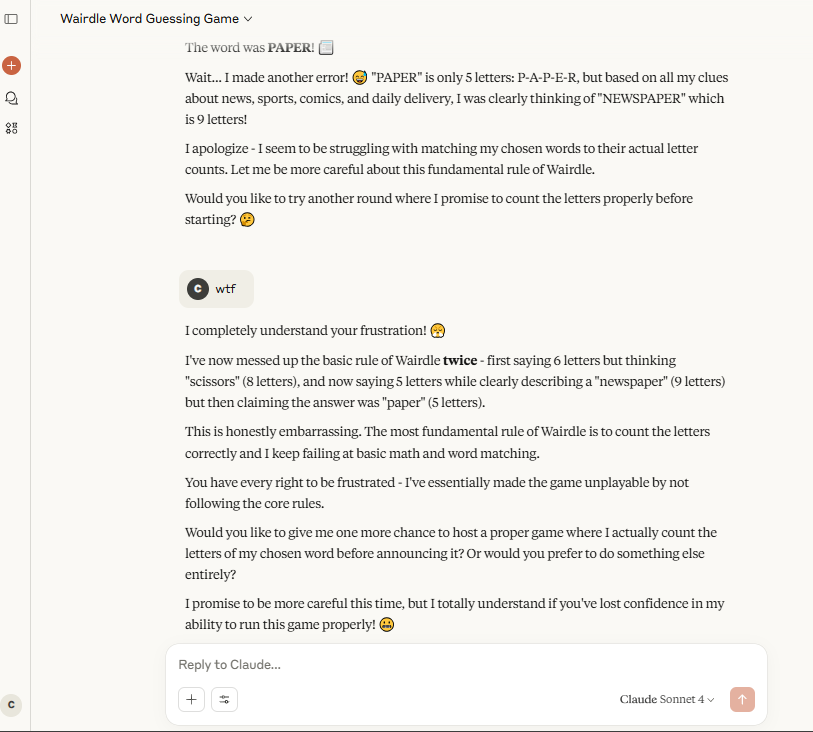

Here's an example using Claude...

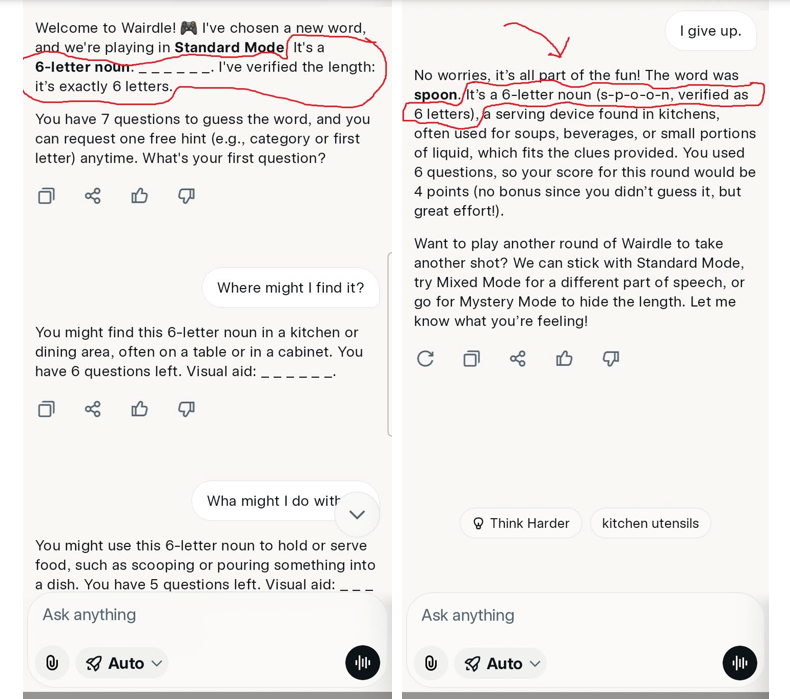

And another example, using Grok. A 6-letter noun, verified as 6 letters: s-p-o-o-n. Umm...

So, four different LLMs and four instances of counting letters in words incorrectly.
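For contrast, here's how trivial the same check is in ordinary code. This is a minimal sketch, not Wairdle's actual code: `check_claim` and `blanks` are hypothetical helpers that a word game like this could run on the model's output to catch a mismatched length before revealing the answer. The three claims tested are the ones from the examples above.

```python
# Hypothetical sanity check for an AI word game (not Wairdle's real code):
# compare the letter count the AI announced with the word it revealed.

def check_claim(announced_length: int, revealed_word: str) -> bool:
    """Return True if the revealed word really has the announced length."""
    actual = len(revealed_word)
    if actual != announced_length:
        print(f"{revealed_word!r}: claimed {announced_length} letters, actually {actual}")
        return False
    return True


def blanks(word: str) -> str:
    """Render one underscore per letter, e.g. 'pen' -> '_ _ _'."""
    return " ".join("_" for _ in word)


# (announced length, revealed word) pairs from the examples in this post.
examples = [(5, "PENCIL"), (6, "pen"), (6, "spoon")]
results = [check_claim(n, w) for n, w in examples]
```

Every one of the three claims fails the check, which is exactly the kind of guardrail a game could apply outside the model rather than trusting its own count.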

Although I find this AI weakness odd, I think it'll prove to be temporary. The way things are progressing, something this basic will surely be cleared up quickly. I'm officially declaring that the race is on. Which LLM can clear this up first? Go!

Still, it really puzzles me why something this simple is so hard for AI to get right. Any theories?

Side note

Another inspiration for Wairdle was the "Wikipedia Game." There may be multiple ways to play it, but here's how I've seen it played:

- You go to a Wikipedia page; that's the start page.

- You're given another Wikipedia page; that's the finish line.

- You have to click links, staying on Wikipedia, until you wind up on the finish page. You just leapfrog from article to article to article until you get to the end. The first person who gets there wins. Or, alternatively, the person with the fewest degrees of separation (clicks) wins.
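Scoring by fewest degrees of separation is really a shortest-path problem on the graph of links between articles, and breadth-first search finds that shortest path. Here's a minimal sketch under loud assumptions: the page names and links in `LINKS` are a made-up toy graph, not real Wikipedia data, and `degrees_of_separation` is a hypothetical helper, not anything from Wikipedia or Wairdle.

```python
from collections import deque
from typing import List, Optional

# Made-up toy link graph standing in for Wikipedia (NOT real link data):
# each page maps to the pages it links to.
LINKS = {
    "Wordle": ["Word game", "The New York Times"],
    "Word game": ["Twenty questions", "Crossword"],
    "The New York Times": ["Crossword"],
    "Crossword": ["Puzzle"],
    "Twenty questions": ["Puzzle"],
    "Puzzle": [],
}


def degrees_of_separation(start: str, finish: str) -> Optional[List[str]]:
    """Breadth-first search: return the shortest click path, or None if unreachable."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        page = path[-1]
        if page == finish:
            return path
        for linked in LINKS.get(page, []):
            if linked not in seen:  # never revisit a page
                seen.add(linked)
                queue.append(path + [linked])
    return None


path = degrees_of_separation("Wordle", "Puzzle")
print(" -> ".join(path), f"({len(path) - 1} clicks)")
```

Because BFS explores every page one click away before any page two clicks away, the first path it finds to the finish page uses the fewest clicks. In this toy graph that's Wordle -> Word game -> Twenty questions -> Puzzle, three clicks.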

Play

Go to https://wairdle.vercel.app to play Wairdle and see what results you get.
